Biography

I have always stood out for my responsibility, perseverance, excellent academic results, and strong technical and research skills. As a result, I have received several academic awards, including an Erasmus Mundus scholarship and the ANID scholarship, awarded by the Chilean government to pursue a PhD in computer science. I am currently a PhD candidate in the IALab group at PUC, and I am passionate about computer vision. I am trying to figure out how to give machines and artificial agents the ability to reason about and see the world as human beings do. Inspired by this, I focus on learning to recognize novel human actions from few samples and multimodal information, as humans do.


- Video Understanding

- Few-Shot Learning

- Continual Learning

- Class Incremental Learning

- Human Action Classification

Education

- PhD in Computer Science (March 2019 - Present)
  Pontificia Universidad Católica de Chile

- BSc in Electronic Engineering (February 2012 - March 2017)
  Universidad del Norte, Barranquilla

- Academic Exchange (September 2015 - July 2016)
  Universidad Politécnica de Madrid

Experience

Main topics:

- Class incremental learning for action classification

- Continual learning

Main topics:

- Few-shot learning for video understanding

- Multimodal models

- Human action recognition

- Attention

- Transductive inference

Functions:

- Teach the main motivations behind video understanding and visual question answering, and how these tasks have been addressed

- Explain inductive artificial intelligence techniques such as SVMs, random forests, naive Bayes, neural networks, and deep learning

- Prepare exercises for the students

- Answer students' questions about the lab assignments and grade them

- Courses: Artificial Intelligence, Introduction to Programming, Deep Learning, Recommender Systems, Visual Question Answering, Video Understanding

Functions:

- Develop new features for cross-platform vaccine-management applications using frameworks such as Ionic

- Develop new web applications and maintain existing ones, covering both frontend and backend

- Deploy mobile applications to the Google Play Store for Android devices and to the App Store for iOS devices

Featured Publications

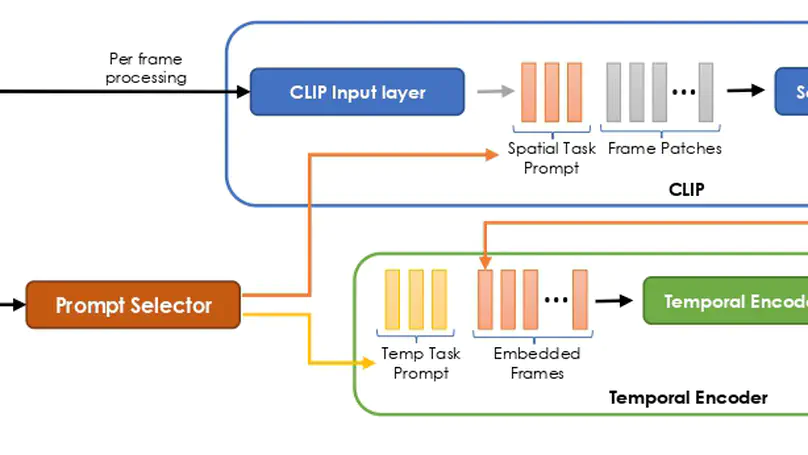

We introduce PIVOT, a novel method that leverages the extensive knowledge in pre-trained models from the image domain, thereby reducing the number of trainable parameters and the associated forgetting. Unlike previous methods, ours is the first approach that effectively uses prompting mechanisms for continual learning without any in-domain pre-training. Our experiments show that PIVOT outperforms state-of-the-art methods by a significant 27% on the 20-task ActivityNet setup.

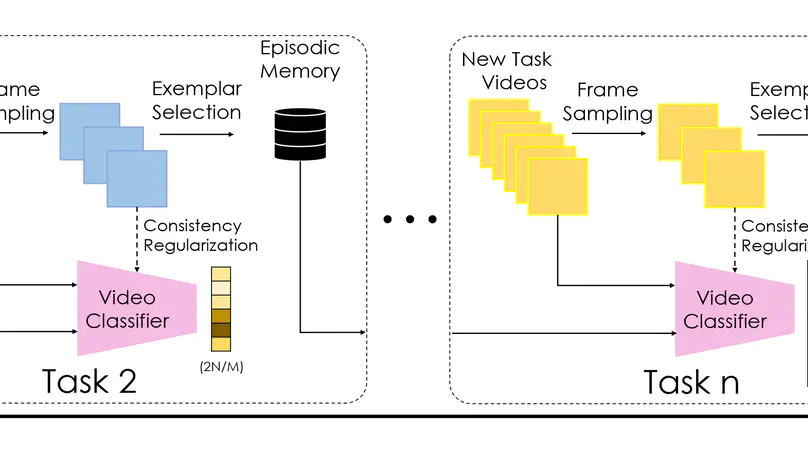

vCLIMB is a standardized testbed for analyzing catastrophic forgetting of deep models in video continual learning. We perform in-depth evaluations of existing continual learning (CL) methods in vCLIMB and observe two challenges unique to video data. First, the selection of instances to store in episodic memory is performed at the frame level. Second, untrimmed training data influences the effectiveness of frame sampling strategies.

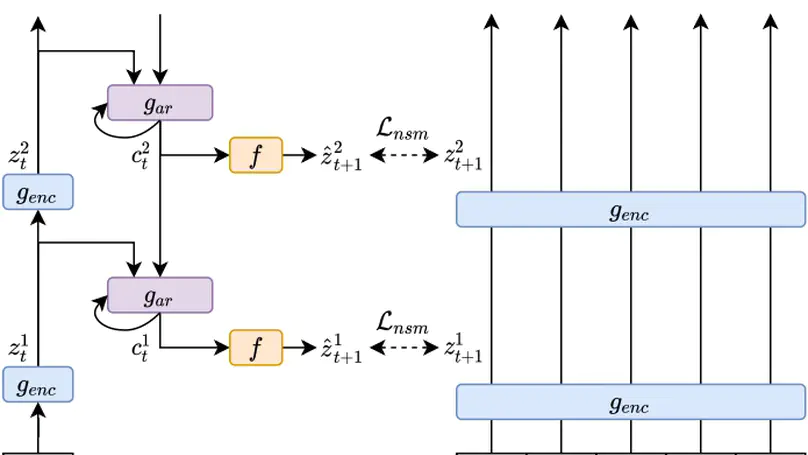

In this work, we propose to use ideas from predictive coding theory to augment BERT-style language models with a mechanism that allows them to learn suitable discourse-level representations.

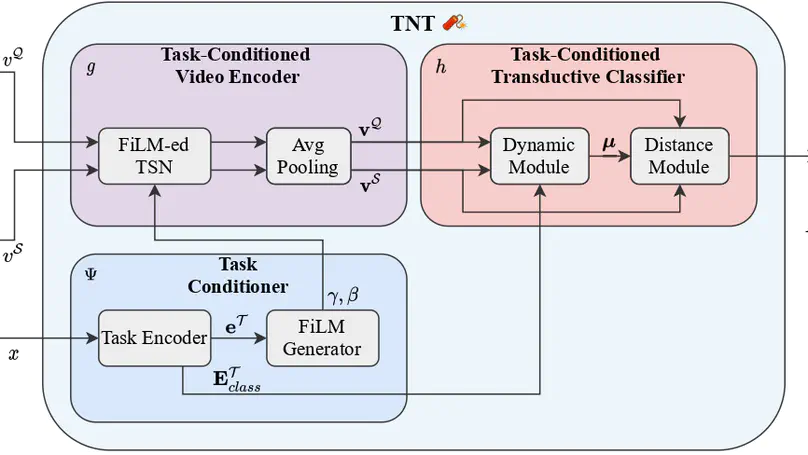

In this paper, we propose to leverage human-provided textual descriptions as privileged information when training a few-shot video classification model. Specifically, we formulate a text-based task conditioner to adapt video features to the few-shot learning task.

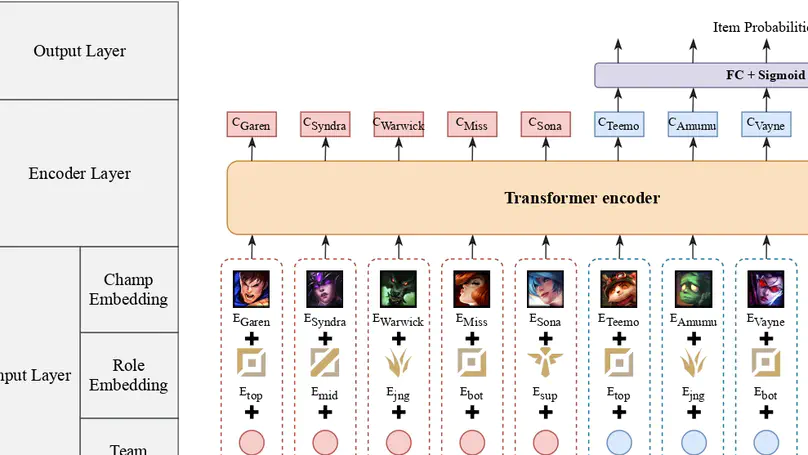

We develop TTIR, a contextual recommender model derived from the Transformer neural architecture that suggests a set of items to every team member, based on the contexts of teams and roles that describe the match. TTIR outperforms several approaches and provides interpretable recommendations through visualization of attention weights.